Outsmarting the system

The Seed Is Planted: TessOCR

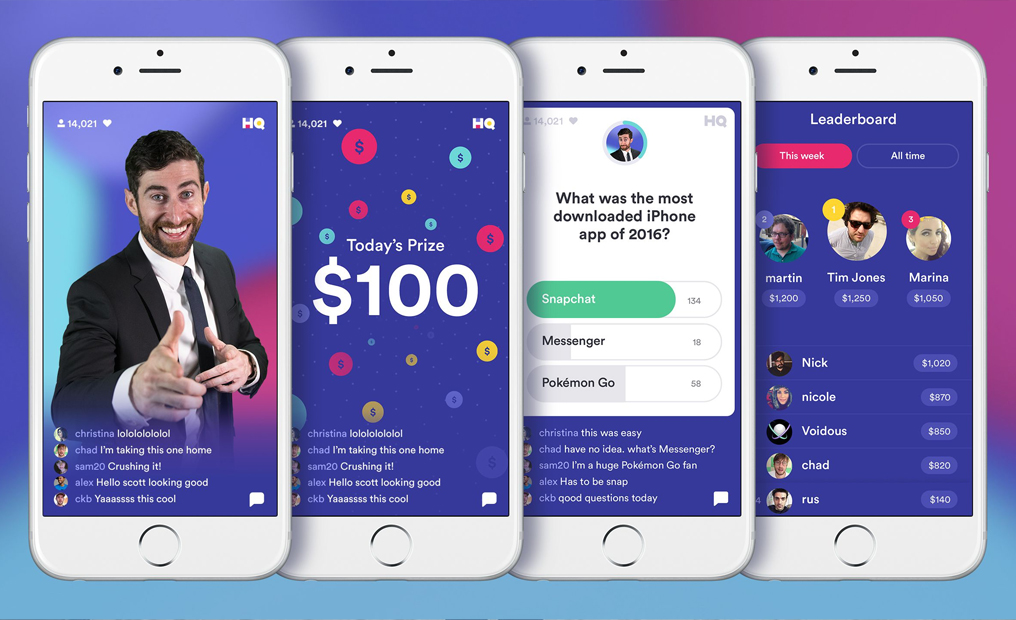

In my freshman year of college, I was introduced to the popular game HQ Trivia, a live quiz show played by millions of people daily that pays users who answer all 12 questions correctly. Each question is typically something obscure, and you have 10 seconds to choose from 3 options. A week later, I decided to try to beat it.

Disclaimer: I in no way endorse cheating. I created the application as an experiment and a proof of concept.

It was a Thursday night, I had just gotten back from a day of classes running from 8am to 6pm, and I was exhausted. Shortly thereafter, HQ Trivia's show went live and I opened it up. I am by no means a Jeopardy god, but while playing the game, I realized that there was a way I could beat it. I'd beat it with Java.

I immediately got to work designing a Java application I called TessOCR. The premise was fairly simple. Using a Java wrapper around the open-source Tesseract library, I wrote an application that ran Optical Character Recognition (OCR) on a user-defined portion of the screen. Upon detecting the text, the application would automatically search Google for the answer. Thanks to QuickTime, I could mirror my phone screen on my laptop and then run OCR on that.

To select the portion of the screen to read, TessOCR runs as a semi-transparent white overlay (10% opaque, so quite see-through) on the left half of the screen. With QuickTime placed on that half, I could click two points on the white overlay to define the "Active Area", which persisted until the application was closed. Hitting the space key captured a BufferedImage of the "Active Area", ran Tesseract to detect the text, and queried Google, all within a couple of seconds. The application then brought up a page displaying the relevant results, with the answer choices highlighted for easy viewing.
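The capture step can be sketched roughly as follows. This is a minimal illustration, not the original TessOCR code: the class and method names (`ActiveArea`, `fromClicks`, `capture`, `searchUrl`) are hypothetical, the OCR call is left out because the post does not name the Tesseract wrapper used, and the sample text passed to `searchUrl` is made up.

```java
import java.awt.AWTException;
import java.awt.GraphicsEnvironment;
import java.awt.Point;
import java.awt.Rectangle;
import java.awt.Robot;
import java.awt.image.BufferedImage;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: turns two overlay clicks into a capture region.
final class ActiveArea {

    /** Builds the rectangle spanned by two clicks, whatever order they came in. */
    static Rectangle fromClicks(Point a, Point b) {
        int x = Math.min(a.x, b.x);
        int y = Math.min(a.y, b.y);
        return new Rectangle(x, y, Math.abs(a.x - b.x), Math.abs(a.y - b.y));
    }

    /** Grabs the pixels inside the region as a BufferedImage (needs a display). */
    static BufferedImage capture(Rectangle area) throws AWTException {
        return new Robot().createScreenCapture(area);
    }

    /** Collapses OCR line breaks and builds a Google search URL for the text. */
    static String searchUrl(String recognizedText) {
        String query = recognizedText.replaceAll("\\s+", " ").trim();
        return "https://www.google.com/search?q="
                + URLEncoder.encode(query, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws AWTException {
        Rectangle area = fromClicks(new Point(300, 50), new Point(100, 400));
        System.out.println("Active Area: " + area);

        if (!GraphicsEnvironment.isHeadless()) {
            BufferedImage shot = capture(area);
            System.out.println("Captured " + shot.getWidth() + "x" + shot.getHeight());
            // The image would be handed to the Tesseract wrapper here (not shown).
        }

        // Made-up OCR output, only to show the URL that would be opened.
        System.out.println(searchUrl("Which planet\nis  largest?"));
    }
}
```

Taking the minimum of each coordinate means the two clicks can be made in any order, which is convenient when the region is defined by hand on an overlay.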

I tested my application first with screenshots from old games, and then with YouTube videos of them. I then tested it by spectating live quiz shows, where it was able to find the answers for 10 or 11 out of 12 questions fairly consistently, meaning potentially winning $1-5 per game per day.

OCR is lame

The New And Improved: HolmesQ

First, OCR is kind of terrible. Tesseract is more or less ancient, and while it is an impressive piece of technology, it is ultimately prone to error and a bit slow when you need accurate results. Enter the web socket.

If data was being streamed live to my phone with the questions and answers, then theoretically that data could be intercepted. This is what I set out to do. First, using mitmproxy, I placed myself as a man-in-the-middle (MITM) between my phone and the internet and opened the app. Immediately I was able to read a very curious piece of JSON that was sent while the game was offline. The interesting part of the JSON was an element called "broadcast", which was set to null. My suspicion was that if I queried the same URL during a live game, setting the appropriate headers, I would receive JSON with a "broadcast" object containing meaningful data. So, I started working on a quick C++ application to do just that.
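To illustrate the idea, here is a rough sketch of such a probe. It is written in Java rather than the C++ used for the real application, purely to keep the examples in one language. The URL, the `Authorization` header, and the token are placeholders: the post does not say which endpoint or headers were involved, so everything named here is an assumption. The JSON check is a deliberately crude pattern match, not a real parser.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.regex.Pattern;

// Hypothetical probe: asks a status endpoint whether a broadcast is live.
final class BroadcastProbe {

    // Matches "broadcast": null, allowing whitespace around the colon.
    private static final Pattern OFFLINE =
            Pattern.compile("\"broadcast\"\\s*:\\s*null");

    /** Builds a GET request carrying the headers the server is assumed to want. */
    static HttpRequest buildRequest(String url, String token) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("Authorization", "Bearer " + token) // assumed header
                .header("Accept", "application/json")
                .GET()
                .build();
    }

    /** True when the JSON has a "broadcast" element that is not null. */
    static boolean isLive(String json) {
        return json.contains("\"broadcast\"") && !OFFLINE.matcher(json).find();
    }

    /** Sends the request and returns the raw response body. */
    static String fetch(HttpRequest request) throws IOException, InterruptedException {
        return HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
    }

    public static void main(String[] args) throws Exception {
        if (args.length < 2) {
            System.out.println("usage: BroadcastProbe <status-url> <token>");
            return;
        }
        String body = fetch(buildRequest(args[0], args[1]));
        System.out.println(isLive(body) ? "A game is live" : "No live game");
    }
}
```

Polling a check like this on a timer is one way a program could notice that a game has started without anyone watching the app.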

Sure enough, once I queried the HQ server during a live game, I received a "broadcast" object containing a new piece of information: an interesting URL referred to as the "socketURL". Being familiar with the concept of a web socket, I suspected that if I connected to this live broadcast socket, I would receive the relevant data streamed over a persistent connection. This is when I ran into the first problem: connecting to a web socket in C++ was a bit of a pain.

In most cases, this would be the point where you turn around and start using a different language. However, I was in a class where our projects had to use a C++ framework, so my hands were tied. So, I came up with a workaround: writing the program in multiple languages. I was familiar with how to connect to a web socket in Swift, and since I was developing on a Mac, that was an option. The primary application is written in C++ and uses an Objective-C++ bridge to call a Swift web socket library, where I process the incoming data and send it back to the C++ code.

Sure enough, once I connected to the web socket, I was hit by a constant stream of JSON data, far too fast to read. So, I wrote a filter to print only the incoming messages that contained the word "question" (case-insensitive), and I was now able to see questions and answers, nicely packaged in JSON.
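The filtering step is simple enough to sketch. The version below uses Java's built-in `java.net.http.WebSocket` listener rather than the Swift library from the actual project, and the class name `QuestionFilter` and the sample messages are made up for illustration; the real message format is not shown in this post.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.WebSocket;
import java.util.Locale;
import java.util.concurrent.CompletionStage;
import java.util.concurrent.CountDownLatch;
import java.util.function.Consumer;

// Hypothetical listener: forwards only messages that mention "question".
final class QuestionFilter implements WebSocket.Listener {
    private final StringBuilder buffer = new StringBuilder();
    private final Consumer<String> sink;

    QuestionFilter(Consumer<String> sink) {
        this.sink = sink;
    }

    /** Case-insensitive check for the word "question" anywhere in the message. */
    static boolean mentionsQuestion(String message) {
        return message.toLowerCase(Locale.ROOT).contains("question");
    }

    /** Passes a complete message to the sink if it survives the filter. */
    void accept(String message) {
        if (mentionsQuestion(message)) {
            sink.accept(message);
        }
    }

    @Override
    public CompletionStage<?> onText(WebSocket ws, CharSequence data, boolean last) {
        buffer.append(data);           // a message may arrive in several parts
        if (last) {
            accept(buffer.toString());
            buffer.setLength(0);
        }
        ws.request(1);                 // ask for the next message
        return null;
    }

    public static void main(String[] args) throws InterruptedException {
        if (args.length == 0) {
            // No socket URL given: run the filter over made-up sample messages.
            QuestionFilter demo = new QuestionFilter(System.out::println);
            demo.accept("{\"type\":\"chat\",\"text\":\"hello\"}");
            demo.accept("{\"type\":\"Question\",\"text\":\"sample\"}");
            return;
        }
        HttpClient.newHttpClient().newWebSocketBuilder()
                .buildAsync(URI.create(args[0]), new QuestionFilter(System.out::println))
                .join();
        new CountDownLatch(1).await(); // keep the process alive while listening
    }
}
```

Lower-casing the whole message before the check is what makes the match case-insensitive, so a key spelled "Question" or "QUESTION" passes just the same.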

Next, I created the interface to render the questions and answer choices and connected everything to various APIs, including Google Custom Search and Wikipedia. By doing basic statistical analysis on all the results and some text processing on the questions and answers, the bot was able to generate a confidence level for each choice. This extended to handling negations: in a negated question, the bot selects the option that doesn't apply.
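As a rough idea of what such scoring can look like, the sketch below counts how often each choice appears in a list of search-result snippets and normalizes the counts into confidences, flipping the scores for negated questions. This is an assumed, simplified scheme, not the bot's actual analysis: the class `AnswerScorer`, the negation word list, the add-one smoothing, and the sample snippets are all illustrative.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical scorer: turns snippet hit counts into per-choice confidences.
final class AnswerScorer {

    /** Very rough negation check on a small, assumed word list. */
    static boolean isNegated(String question) {
        String q = " " + question.toLowerCase(Locale.ROOT) + " ";
        return q.contains(" not ") || q.contains(" never ") || q.contains("n't ");
    }

    /** Counts case-insensitive, non-overlapping occurrences of needle. */
    static int count(String haystack, String needle) {
        String h = haystack.toLowerCase(Locale.ROOT);
        String n = needle.toLowerCase(Locale.ROOT);
        if (n.isEmpty()) return 0;
        int hits = 0;
        for (int i = h.indexOf(n); i >= 0; i = h.indexOf(n, i + n.length())) hits++;
        return hits;
    }

    /** Returns a confidence per choice; the values sum to 1. */
    static Map<String, Double> confidences(String question, List<String> choices,
                                           List<String> snippets) {
        boolean negated = isNegated(question);
        Map<String, Double> scores = new LinkedHashMap<>();
        double total = 0;
        for (String choice : choices) {
            int hits = 0;
            for (String snippet : snippets) hits += count(snippet, choice);
            double score = hits + 1;             // add-one smoothing avoids zeros
            if (negated) score = 1.0 / score;    // fewest mentions wins when negated
            scores.put(choice, score);
            total += score;
        }
        final double sum = total;
        scores.replaceAll((choice, score) -> score / sum);
        return scores;
    }

    /** Picks the choice with the highest confidence. */
    static String best(Map<String, Double> confidences) {
        return confidences.entrySet().stream()
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey)
                .orElseThrow();
    }

    public static void main(String[] args) {
        // Made-up snippets standing in for search results.
        List<String> snippets = List.of("Mars and Venus are planets.",
                                        "Mars is the red planet.");
        Map<String, Double> c = confidences("Which of these is not a planet?",
                List.of("Mars", "Venus", "Pluto"), snippets);
        System.out.println(c + " -> " + best(c));
    }
}
```

Inverting the score for negated questions means the choice mentioned least often alongside the question's subject ends up with the highest confidence, which matches the intuition behind "which of these is not" questions.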

This bot was capable of automatically detecting a live game, entering the game pretending to be a real user, and then finding the answer for each question in under a second.